How to upload a website's content into an OpenAI GPT's knowledge

Nov 20, 2023

OpenAI's new custom GPTs feature lets you upload files for a GPT to use as knowledge. If you want to upload all the content from one or more websites, you first have to save all of each website's pages (known as crawling). UseScraper Crawl is a powerful and easy solution for crawling any website, be it 10 or 10,000 pages of content. After crawling a website, UseScraper lets you download the whole website as a single text or markdown file, which can be uploaded into your OpenAI GPT's knowledge.

Let's crawl a website

First, sign up for a free UseScraper account and you'll get $25 in free credit to get you started.

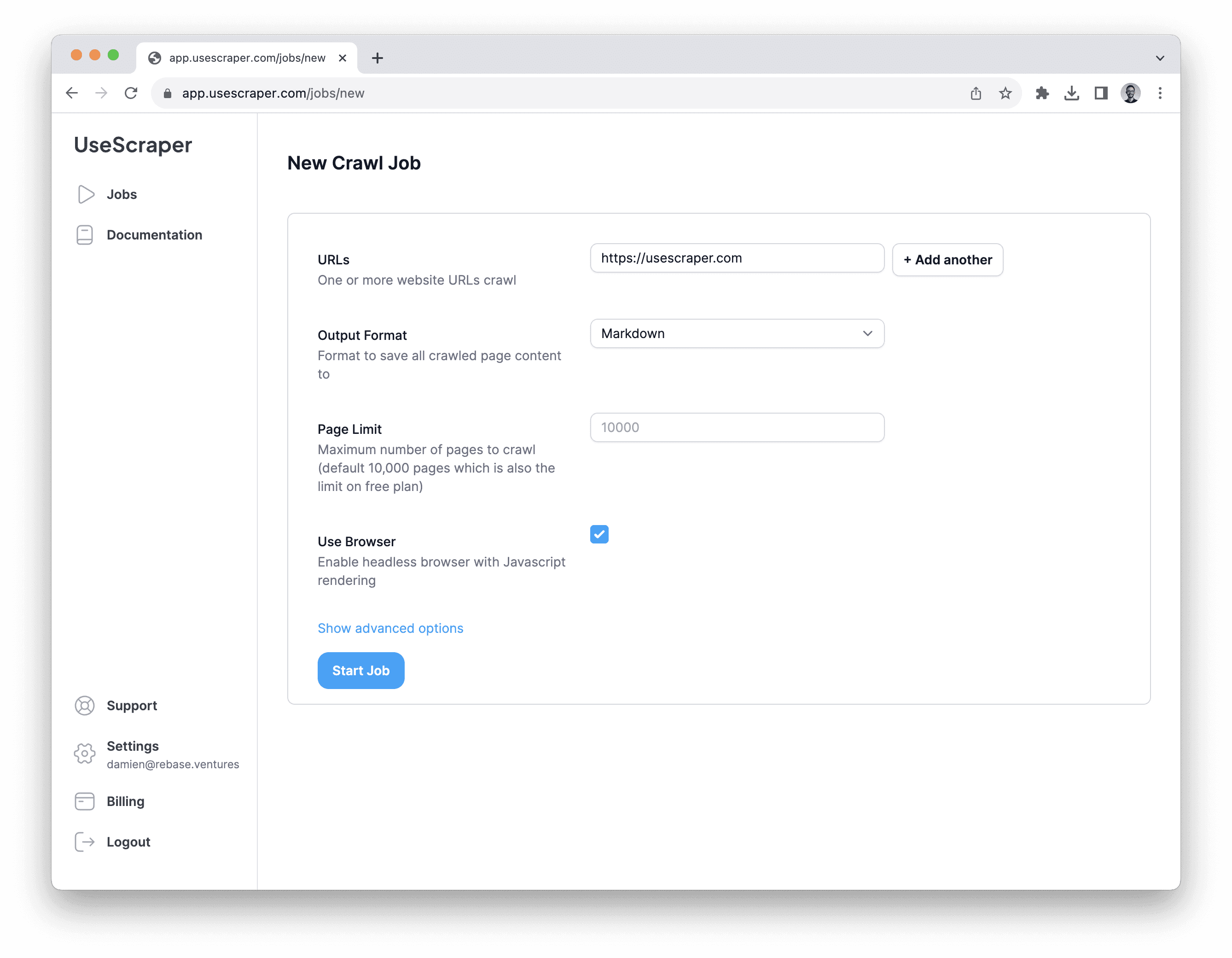

You can start a crawling job from the UseScraper dashboard, or via the /crawler/jobs API endpoint. Enter the URL of the website you want to scrape. Feel free to input https://usescraper.com as a test. Then click "Start Job".

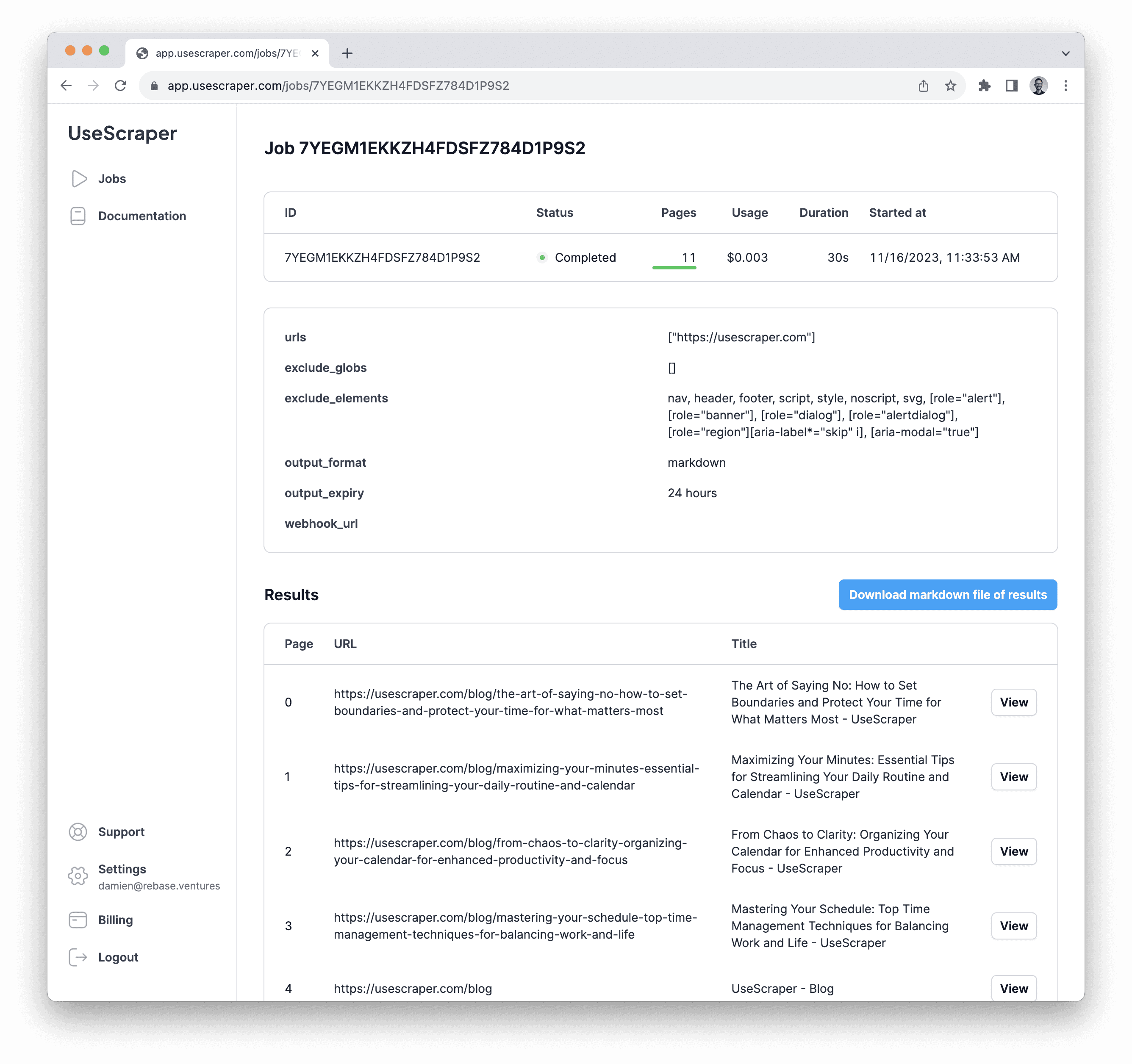
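If you'd rather start the job from code than from the dashboard, the request to the /crawler/jobs endpoint might look roughly like the sketch below. Only the endpoint path comes from this article. The base URL `https://api.usescraper.com`, the Bearer token header, and the `urls` field in the JSON body are assumptions, so check the UseScraper API docs for the exact request format before relying on it.

```python
import json
import urllib.request

# Assumptions: only the /crawler/jobs path is taken from the article.
# The base URL, auth scheme and body shape below are illustrative guesses.
API_BASE = "https://api.usescraper.com"
API_KEY = "YOUR_API_KEY"  # placeholder, replace with your own key


def build_crawl_request(site_url: str, api_key: str = API_KEY) -> urllib.request.Request:
    """Build (but do not send) a POST request that asks for a crawl of site_url."""
    payload = json.dumps({"urls": [site_url]}).encode("utf-8")  # assumed body shape
    return urllib.request.Request(
        url=f"{API_BASE}/crawler/jobs",
        data=payload,
        method="POST",
        headers={
            "Authorization": f"Bearer {api_key}",  # assumed auth scheme
            "Content-Type": "application/json",
        },
    )


def start_crawl(site_url: str, api_key: str = API_KEY) -> dict:
    """Send the request and return the decoded JSON response."""
    with urllib.request.urlopen(build_crawl_request(site_url, api_key)) as resp:
        return json.load(resp)
```

Calling `start_crawl("https://usescraper.com")` with a real API key would send the request. Building the request in its own function makes it easy to inspect the URL, headers and body before anything goes over the network.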

You can then watch the progress of the crawling job live. The results will be shown when it's complete.

Click "View" next to any page to preview the scraped content. Now click the "Download markdown file of results" button to get all the content as a single markdown file.

Upload the content to your OpenAI GPT

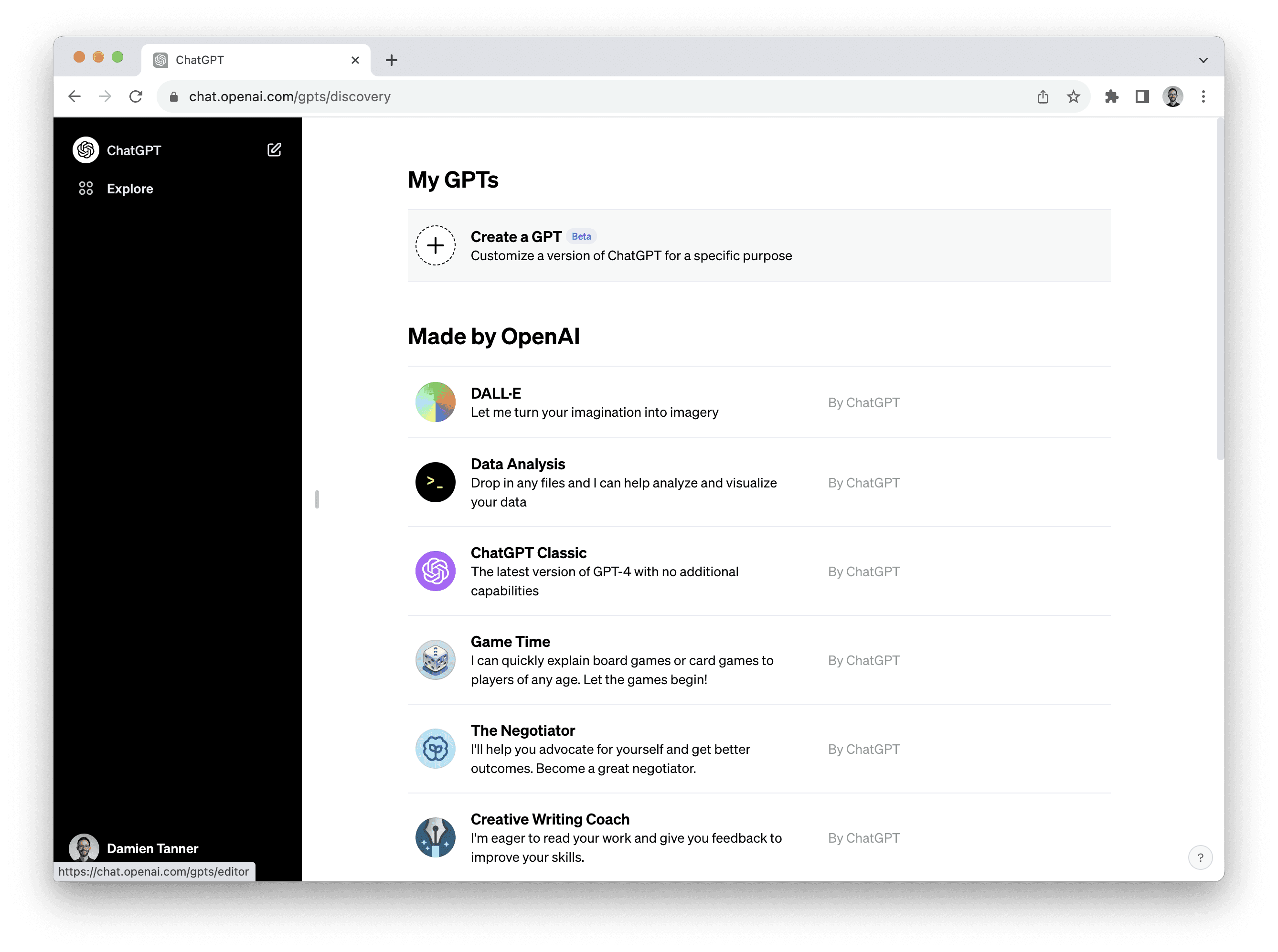

Now we'll upload the markdown file of the website's content to a GPT's knowledge. If you don't already have a GPT created, head to ChatGPT Explore and click "Create a GPT".

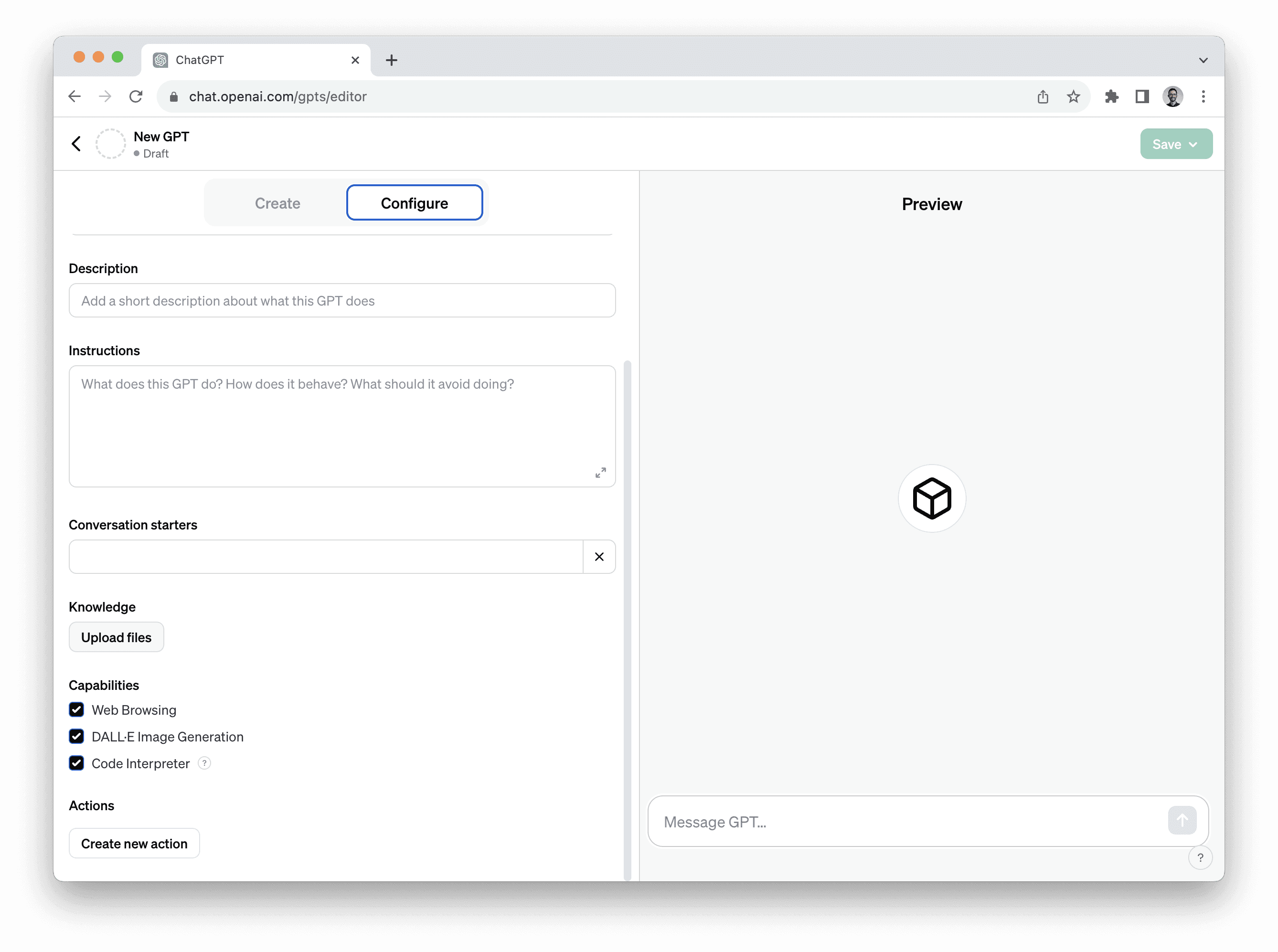

Click the "Configure" tab and scroll down to "Knowledge". Click "Upload files" and select the markdown file you downloaded from UseScraper.

That's it! Now chat with your GPT and you'll see it can use the content from the website you uploaded to answer questions more accurately. You can crawl more websites with UseScraper and upload their files to the same GPT to expand its knowledge further.
